State of AI Photojournalism Report 2025

We're still at a stage where the produced stories are simulated, but as you can see on this site, things progress very quickly. We experimented with new approaches again this year and wanted to share what we found interesting.

Every year, we modernize our approach to automate our processes as much as we can and get the most out of AI. This is very unproductive, as we constantly hit the limitations of nascent approaches, but trying to automate everything is a good way to (1) learn and (2) get a preview of things that might work in the future (once we iron out all the bugs and smooth out the process).

Executive Summary

This year's experiments revealed both impressive capabilities and persistent limitations in AI's attempt to simulate photojournalism.

1. Orchestration and Language Models

1.1 Agents are the new trees

This year marked our transition away from tree-based generative workflows toward more agentic approaches. Instead of rigid, predetermined paths, our AI agents now have access to tools and can make decisions about how to approach each story. The addition of web search capabilities fundamentally changed how agents research and develop narratives. Unlike a fixed pipeline, agents can plan tasks and invoke tools on their own.

We simply asked it to generate 30 stories based on the key events and themes of 2024, so we've really under-used our new agent. But we have big plans for the future, and laying these foundations will help us move quickly toward systems that are far more autonomous and proactive. As it stands, our agent could research topics online, generate ideas on its own, and write up articles. It can edit content, generate images, adjust the style of articles, or produce 5 articles daily on trending tweets. Our agent is actually quite similar to the way Raccook's cooking blog is created (except that instead of generating news stories, it's about a cooking raccoon).

1.2 The Article Generation Pipeline

Generating those 30 stories was still too much to ask of the best model in a single job. Even with the tasks chopped up, it was still quite prone to crashes. Much like the unstable early pipelines built on GPT-3, the new agentic pipeline required many adjustments and nudges along the way. We had to kick it back on track a few times when it failed or just gave up on tasks. To keep the context size in check, we divided the generation into 3 major phases. The agent knew the instructions for all 3 phases, but it focused on each part one at a time, saving plans and working documents to a research folder.

- Deep research, with web searches, to uncover the key events and themes of 2024. This one-shot task took a while, but it summarized the key events of 2024 pretty accurately.

- Brainstorm and evaluation sub-processes. For each theme, the agent had to come up with 3 solid photojournalism story angles, then a jury of 5 fictional judges would comment on the ideas, and a summary would be written for the final phase. We've used a similar brainstorm technique since the beginning and found that, for LLMs too, the best ideas aren't always the first ones that come to mind. Simply asking an LLM to generate 3 options, rate them on some criteria, and reflect is often enough to greatly improve output quality. This reasoning process is now internalized in many models, since DeepSeek outperformed major models with a much smaller model and budget.

- Article write-up. Based on the idea selected in the brainstorm document, the article and all its content are written from scratch by an agent that can call tools.
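The brainstorm-and-jury step in the second phase can be sketched roughly as follows. This is a minimal illustration, not our actual pipeline: the names `Angle`, `Judge`, `keyword_judge` and `pick_best_angle` are hypothetical, and the toy judges are simple keyword checks standing in for LLM calls that would comment on and rate each idea.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable


@dataclass
class Angle:
    theme: str
    pitch: str


# A judge is any callable that scores an angle from 0 to 10.
# In a real pipeline this would be an LLM call; here it is a stand-in.
Judge = Callable[[Angle], float]


def pick_best_angle(angles: list[Angle], judges: list[Judge]) -> tuple[Angle, float]:
    """Return the angle with the highest mean judge score, plus that score."""
    if not angles or not judges:
        raise ValueError("need at least one angle and one judge")
    scored = [(angle, mean(judge(angle) for judge in judges)) for angle in angles]
    return max(scored, key=lambda pair: pair[1])


def keyword_judge(keyword: str) -> Judge:
    """Build a toy judge that favors pitches mentioning a keyword (assumption)."""
    return lambda angle: 8.0 if keyword in angle.pitch.lower() else 4.0


# Three candidate angles for one theme, rated by a jury of five toy judges.
angles = [
    Angle("elections", "Candidates shaking hands on stage"),
    Angle("elections", "Poll workers counting ballots at night"),
    Angle("elections", "Crowd portrait outside a polling station"),
]
jury = [keyword_judge(k) for k in ("night", "workers", "ballots", "portrait", "crowd")]

best, score = pick_best_angle(angles, jury)
print(f"Selected: {best.pitch} (mean score {score:.1f})")
```

Averaging the scores is only one possible way to summarize the jury. A written summary of the judges' comments, as described above, carries more nuance than a single number.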

1.3 Quality Issues

While the agentic approach brought new capabilities, it also introduced new challenges. Context management remains problematic, and we discovered that process-following agents sometimes struggle with creativity, defaulting to safe, predictable choices rather than taking artistic risks. I assume this is related to the temperature setting of task-optimized models, and I wonder whether sub-agents or a new kind of model architecture with a dynamic temperature level would be a viable solution.
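To make the temperature hunch concrete, here is a small sketch of how temperature reshapes a sampling distribution. It only illustrates the general mechanism (dividing scores by a temperature before a softmax); it does not confirm that temperature is the cause of the bland output we saw. The function name and the example scores are made up for illustration.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up scores for three candidate choices, e.g. three color treatments.
logits = [2.0, 1.5, 0.5]
for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(f"temperature={t}: {[round(p, 3) for p in probs]}")
```

At a low temperature, almost all of the probability lands on the top-scoring option, which matches the "first probable choice" behavior. At a higher temperature, the less likely options get a real chance of being picked.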

2. Celebrating weird images

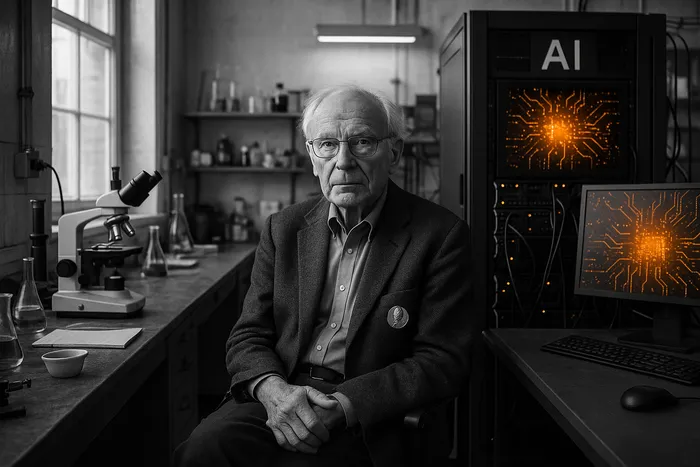

2.1 Current State of AI imaging

Photojournalist AI agents could source photos from image libraries, Google Street View, Instagram, or other crowdsourced repositories, but they still lack the agency to do so. While the prospect of cyborg photographers walking around with their DSLRs is absurd, we could easily envision an algorithm sorting through security camera and social media footage to find good images of real events, or even to reconstruct an accurate depiction of them. We're not there yet.

We're still relying on diffusion models available via API, but they've gotten good. For previous versions, we used Midjourney, but the model was only available as a Discord bot, so it wasn't really viable for automation. We had automated most of the pipeline, but the lack of API availability was a real bummer. Stable Diffusion and Flux models have been impressive open-source progress, but the new OpenAI image model, now available via API, really brought a new level of visual quality to automations.

2.2 Bad Prompts, Bad Images

The lack of creativity in some LLMs mentioned earlier becomes pretty visible when asking the model to plan photo ideas. The model generated many safe and obvious visual descriptions, losing the artistry and singular approaches that were more visible in the 2023 series. We've been served many bland, often absurd, stock-looking images.

2.3 The love for Selective Color

The agent's 300-line instructions include a section on how to compose visual photo descriptions, which might have pushed the model toward specific image styles. The instructions initially said to "provide details around composition, color treatment, tones and…", and statistically, models running at a low temperature, like tool-calling agents, love to settle on the first probable choice. The most obvious color treatments the agent could think of were "selective color" and "warm tones".

Many of the first few projects went for this style, and it was so awful that we had to unplug the machine and adjust the instructions a bit. Combined with the AI-judge groupthink effect (there was always a judge who thought selective color would bring a spark of color to the boring black-and-white photos), we ended up with a feedback loop reinforcing the worst photographic clichés. The selective color treatment (that tired effect where everything is black and white except for one dramatic red rose or yellow taxi) became the default "artistic" choice.

We adjusted the instructions to avoid explicitly mentioning specific color treatments, instead focusing on the emotional intent and narrative purpose of the visual choices. We also tweaked the judge instructions to value originality over conventional "prettiness." Without being too prescriptive, we still needed to adequately capture the archetypes of photojournalism.

This revealed how AI systems, when given vague artistic direction, will gravitate toward the most statistically common interpretations in their training data. "Color treatment" to an AI means the most overused Instagram filters. The models don't understand that good photojournalism often means restraint—that sometimes the most powerful choice is no treatment at all.
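One way to catch this kind of convergence earlier would be to measure how often a given treatment shows up across the generated photo descriptions. The sketch below is hypothetical and was not part of our pipeline: the watch-list reuses the two phrases from our own experience, while the function names and the 50% threshold are arbitrary assumptions.

```python
from collections import Counter

# Hypothetical watch-list; the two phrases are the ones our agent kept choosing.
WATCHED_TREATMENTS = ("selective color", "warm tones")


def treatment_frequencies(
    descriptions: list[str],
    phrases: tuple[str, ...] = WATCHED_TREATMENTS,
) -> dict[str, float]:
    """Share of descriptions that mention each watched phrase (case-insensitive)."""
    if not descriptions:
        return {phrase: 0.0 for phrase in phrases}
    counts: Counter[str] = Counter()
    for text in descriptions:
        lowered = text.lower()
        for phrase in phrases:
            if phrase in lowered:
                counts[phrase] += 1
    return {phrase: counts[phrase] / len(descriptions) for phrase in phrases}


def overused(descriptions: list[str], threshold: float = 0.5) -> list[str]:
    """Phrases appearing in at least `threshold` of the descriptions."""
    shares = treatment_frequencies(descriptions)
    return [phrase for phrase, share in shares.items() if share >= threshold]


# Invented sample descriptions, for illustration only.
sample = [
    "Black and white street scene, selective color on a red umbrella",
    "Selective color portrait of a vendor, yellow apron highlighted",
    "Wide shot of a flooded road in warm tones",
    "Selective color on a single protest banner",
]
print(overused(sample))
```

A simple substring count like this misses paraphrases, so it would only flag the most literal repetitions.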

2.4 Lack of Real World Understanding

Diffusion models have improved to a shocking degree, but they still struggle to render some things realistically. They don't think too much about the scene and will often lazily try to tick boxes in their neural nets by inserting absurd elements.

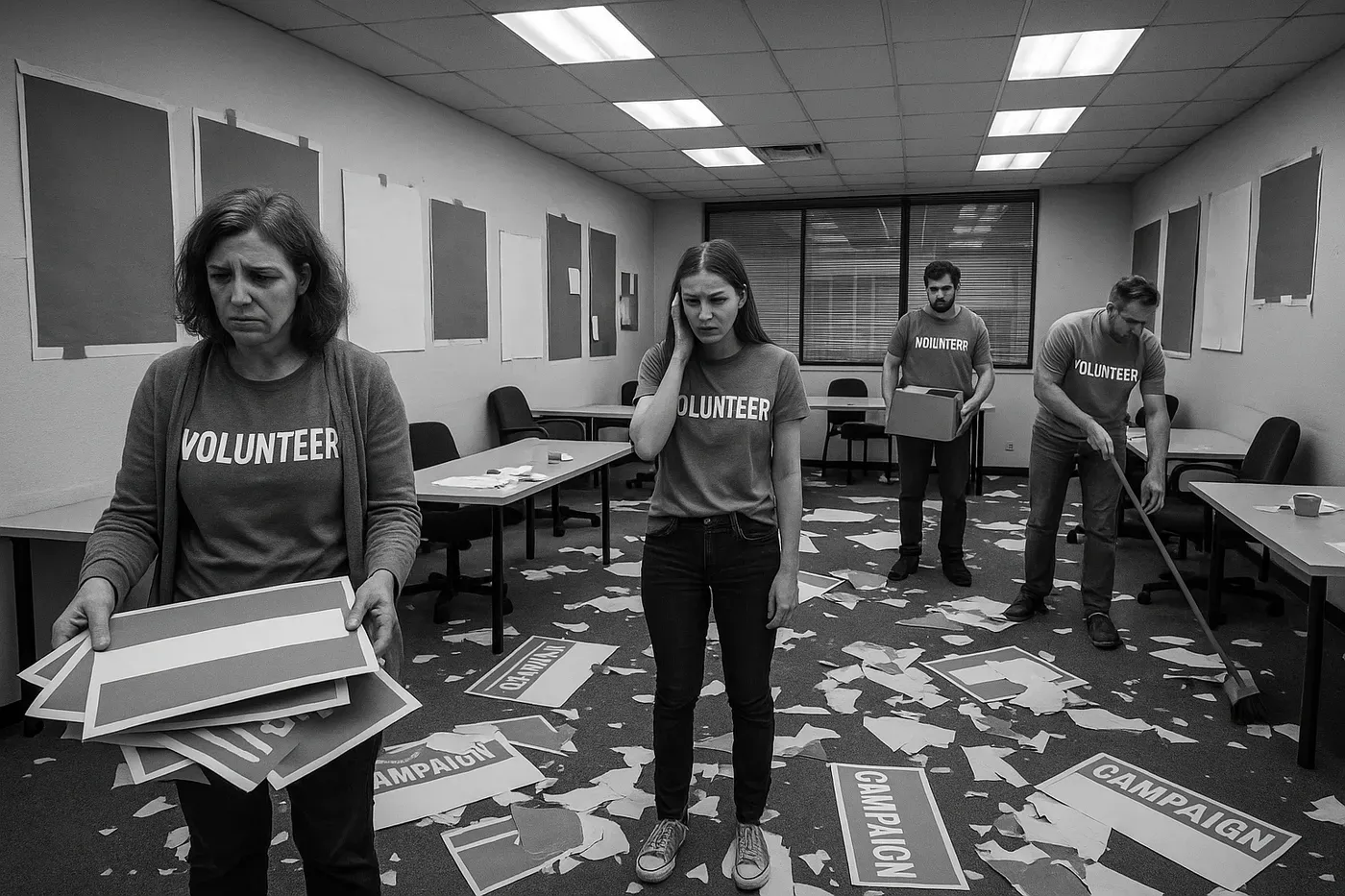

2.4.1 Literal Interpretation

Examples include "campaign volunteers" or protesters wearing hats with "PROTEST" written on them. The models understand that protests involve signage and slogans, but fail to grasp the actual content and context of real protest movements.

2.4.2 Documents and Screens

One recurring mistake of diffusion models involved text documents and phone screens. They render screens with impossible interfaces, documents with nonsensical text, and digital displays that violate basic principles of how technology works.

2.5 Managing faces

Some concepts are harder for image models to grasp and would require a more thorough explanation before being handed to a model. The way embedding models like CLIP work seems to make features converge in the embeddings. For example, when there are multiple people in one photo, they tend to share similar poses, outfits, or features for no real reason. This is likely due to the way the target point is encoded in the bimodal (text+image) vector space.

Conversely, the model isn't natively able to depict subjects consistently between photos from text descriptions alone. Because the models have been trained to recognize their images, using celebrities' names works to get more consistent results. An early AI artist even created a comic using a Zendaya-looking character to solve this problem. A better, albeit hacky, solution is to generate the cast, like a character sheet, and reference it in other photos. This strategy isn't well supported, and even neutral images can introduce unintended stylistic features (like a white background if the character sheet has a white background). We haven't tackled this issue this year. Perhaps a new generation of text-to-image model architectures will improve these cases.

3. Publishing the website

3.1 Technical Implementation

It's not very exciting, but we rebuilt the website from scratch around agent-driven content pipelines. We worked with the repo.md framework, which allows publishing Markdown content (a very natural output format for LLMs). The website shell is now rendered on the edge (close to you, anywhere in the world), with content that is automatically generated from Markdown files in the repository. As new files are added, the website evolves automatically.
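The idea of a site that grows as Markdown files are added can be illustrated with a generic sketch. To be clear, this does not use or represent the repo.md API. It is a plain standard-library example, and `read_title`, `build_index` and the folder layout are assumptions made for illustration.

```python
from pathlib import Path


def read_title(markdown_file: Path) -> str:
    """Use the first '# ' heading as the title, else fall back to the file name."""
    for line in markdown_file.read_text(encoding="utf-8").splitlines():
        if line.startswith("# "):
            return line[2:].strip()
    return markdown_file.stem


def build_index(content_dir: Path) -> list[dict[str, str]]:
    """List every Markdown file under content_dir as a page entry, sorted by path."""
    pages = []
    for markdown_file in sorted(content_dir.rglob("*.md")):
        # The slug is the path relative to the content folder, without ".md".
        slug = markdown_file.relative_to(content_dir).with_suffix("").as_posix()
        pages.append({"slug": slug, "title": read_title(markdown_file)})
    return pages
```

Calling `build_index(Path("content"))` again after an agent drops a new `.md` file into the folder would pick up the new page without any other change, which is the property that makes Markdown a convenient contract between agents and the website.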

3.2 Posturing and AI Acceptance

We felt it was more disruptive to label the content clearly as AI-generated.

4. Current Limitations and Future Directions

4.1 Immediate Improvements

4.1.1 Multi-Agent Systems

- Delegating processes to different models with adjusted temperatures

- Specialized agents for creative versus analytical tasks

- Better context management through distributed processing
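As a rough sketch of what this delegation could look like, the snippet below routes task types to agent profiles with different temperatures. Everything here is hypothetical: the profile names, the temperature values, and the task labels are placeholders, not settings we have tested.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentProfile:
    name: str
    temperature: float
    purpose: str


# Hypothetical profiles; the temperature values are illustrative guesses.
PROFILES = {
    "creative": AgentProfile("creative", 1.0, "brainstorm angles and photo ideas"),
    "analytical": AgentProfile("analytical", 0.2, "research, tool calls, summaries"),
}

# Hypothetical task labels that should go to the creative profile.
CREATIVE_TASKS = {"brainstorm", "photo_description"}


def route(task_kind: str) -> AgentProfile:
    """Send creative tasks to the high-temperature profile, the rest to the other."""
    key = "creative" if task_kind in CREATIVE_TASKS else "analytical"
    return PROFILES[key]


for task in ("web_research", "brainstorm", "photo_description"):
    profile = route(task)
    print(f"{task} -> {profile.name} (temperature {profile.temperature})")
```

Keeping each sub-agent focused on one kind of task would also keep its context smaller, which ties into the context management point above.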

4.1.2 Enhanced Research Capabilities

- Real-world alternatives to web search

- Agent autonomy for conducting and contacting people

- Moving from pure generation toward curation of existing content

4.2 Long-term Vision

4.2.1 Presence and Autonomy

The future might include autonomous agents that produce news stories throughout the year, with judges selecting the best ones at year's end. This could involve:

- Tele-presence through robotic systems

- Scraping smart-glasses footage for street-level documentation (similar to Jon Rafman's 9eyes project using Google Street View)

- Creating a form of mediated observation that approaches real photojournalistic presence

4.2.2 Project Stewardship

Currently driven by a single soul, the project could benefit from a more distributed approach to governance and creative direction.

Conclusion

The 2025 experiments revealed that while AI systems have become remarkably capable at mimicking the surface forms of photojournalism, they still fundamentally misunderstand the essence of the craft. From selective color disasters to impossible phone screens, these failures aren't just technical limitations—they're windows into how AI perceives and reconstructs human creative practices. As we continue this experiment, the gap between simulation and reality remains our most fertile ground for discovery.